Ben Wright, March 21st 2024

This is the first of a two-part series describing the work Aniket Mahindrakar and I have been conducting as research assistants for the Mountain Legacy Project (MLP). For several years now the MLP has been utilizing machine-learning to assist with quantifying landscape changes. While this expedites a time-consuming task previously performed by hand, the computer generated results are not yet as accurate as those produced by the human eye. Our goal is to utilize deep learning to acheive both accuracy and efficiency. In this post I will write about the motivation for applying a form of machine learning that relies on convolutional neural networks (CNNs) to classify MLP images. In Part 2 I will go into further detail about the challenges of using the MLP images as a unified dataset and the specific implementations of CNNs that we are exploring.

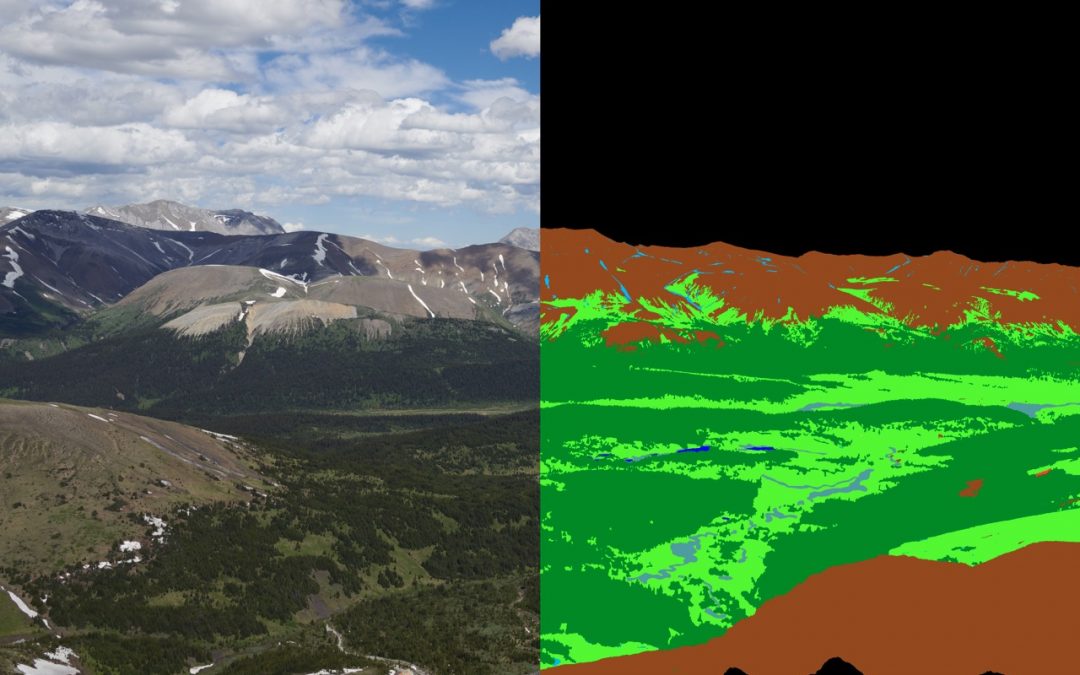

When I look at the MLP’s vast collection of historic and repeat images, I see not only a wealth of visual information but also an immense, hugely promising and largely untapped pool of raw data. With over 120 000 images and more than 10 million pixels in a typical image there are over 1 trillion unique pixels, each representing a single data point. One of the ways that each pixel can be represented is as the land cover type of the area that it depicts. We are currently working with nine categories, examples of which include conifer forest, broadleaf-mixed wood forest, herbaceous shrub and regenerating area. These various land cover types are overlaid pixel-wise on the image, as a land cover “mask.” By representing historic and repeat images in this way, they can be compared and information about changes in land cover over time can be extracted and analyzed.

In order to achieve this, however, each historic and repeat image to be studied must be digitally classified by hand down to the pixel scale. The detail contained in the images renders this task immensely painstaking and very slow, meaning that study areas and selected images must be very limited. Machine learning presents an alternative and promising pathway toward classifying MLP images and extracting important data. Convolutional neural networks (CNN), described below, are especially well suited to this task, and we are working hard to effectively implement this technology to be an efficient means of classifying land cover in MLP images.

A set of MLP images and land cover masks showing the scale and level of detail required in classification.

When the human eye perceives an image, incoming light is absorbed by tiny rods and cones which convert photons into electrical signals. These signals then cascade through a layered network of neurons, with each neuron outputting a signal based on a combination of the signals produced by those neurons connected to it in the prior layer. Neurons in the earliest layers are activated by simple stimuli, such as a sharp vertical line in the field of view, while neurons in deeper layers may be activated only by a single individual’s face, allowing us to recognize that person.

The objective of artificial neural networks is to replicate this structure using computation. Rather than electrical signals, numeric values are passed from one artificial neuron to the next, starting with the image pixel values in the input layer. At each neuron in the following layer a value is then calculated as a weighted function of the values from neurons connected to it in the previous layer. The values of neurons in the final layer represent the probability of outcomes being predicted by the network. In the case of MLP images this means the probability that each pixel represents a given land cover type. CNNs are distinct as a type of neural network in that the functions defining the values passed from one layer to the next are filters – much like those used in photo editing – which are applied to (or convolved with) the previous layer.

Demonstration of the operation of convolution. The filter is multiplied one element at a time with pixels in the input layer to determine the value in the output layer. The values in the filter are modified during model training.

To train the network to recognize land cover types, an image is passed into it and the network outputs a prediction. This prediction is then compared with a manually classified true land cover type mask of the image, and the values in the filters connecting layers of the network are updated in a way that would make the network more likely to predict the true mask. This is repeated hundreds or thousands of times, updating the filters only a small amount with each iteration so that the network is not too heavily biased toward the images it is trained on and can still generalize to other images, correctly assigning each pixel into one of nine categories. Training a network in this fashion takes a matter of hours or days and applying the resulting model to a single image to infer a mask takes just seconds or minutes, far outstripping the pace of manual classification.

So where do the challenges come in? MLP images are complex and pixel dense. While a high pixel count makes the photographs a remarkable source of data, this rather paradoxically adds complications when attempting to generate an accurate mask. Part 2 of this series will discuss some of these complications in detail and describe our ongoing work toward implementing a CNN-based technology.