by Spencer Rose | Mar 4, 2021

The Mountain Legacy Project (MLP) collection is a vast visual record of ecosystem changes in Canada’s mountains. With more than 120,000 high-resolution historical photos spanning the 1860s through the 1950s, along with thousands of modern repeat photos, the collection is rich in ecological data ranging in scope from whole landscapes to individual species. But much of the MLP collection remains uncharted, which has motivated researchers to leverage advanced image analysis to classify and quantify features captured by the imagery. Using these data, researchers can then detect and track long-term ecosystem shifts on a large scale.

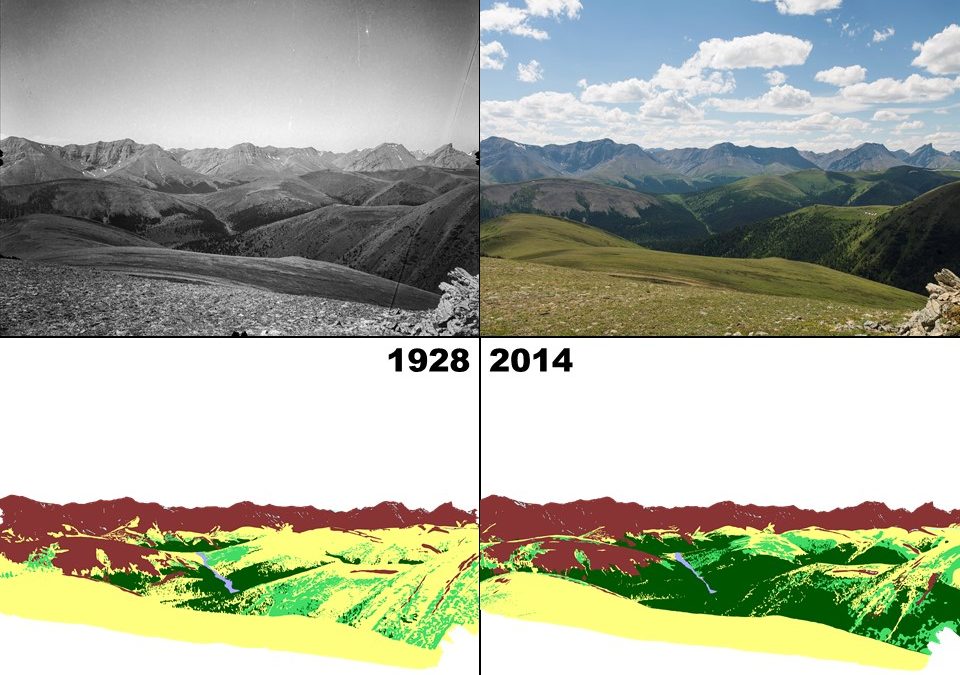

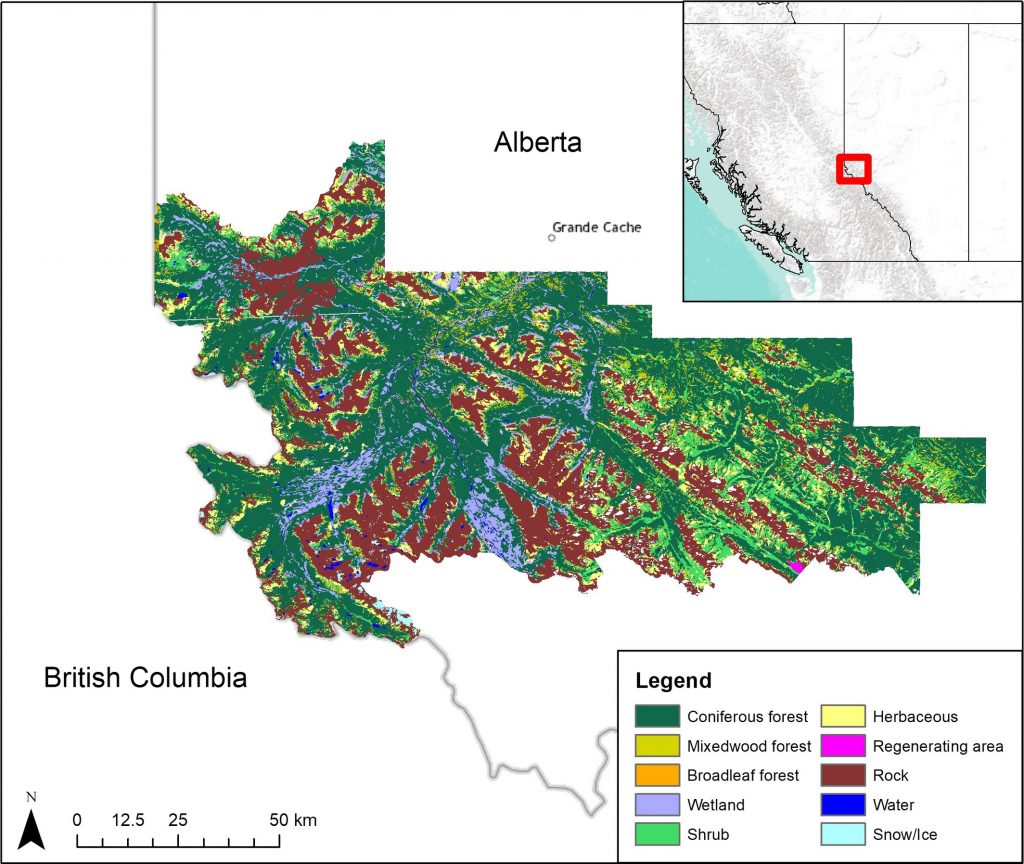

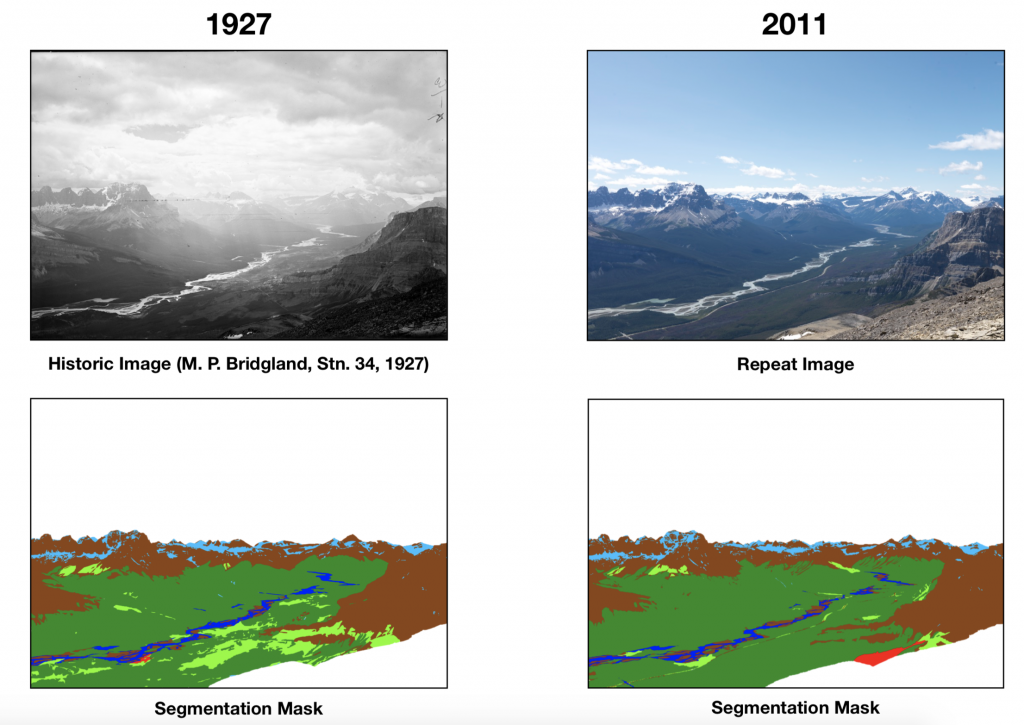

Recently, my master’s thesis research in Computer Science at the University of Victoria explored a new approach to classifying land cover in MLP images using some of the modern tools of artificial intelligence: deep convolutional neural networks. With the support of James Tricker, a doctoral student in UVic’s School of Environmental Studies, and UVic Research Associate Mary Sanseverino, the study evaluated how well state-of-the-art deep learning models classify ecologically distinct regions, or land cover types, such as rock, ice and snow, bodies of water, and different types of vegetation, within both historic and modern landscape images. Experimental results from the development and testing of a prototype landscape classification tool suggest deep learning may provide a key tool in the large-scale analysis of the MLP collection images.

Classification of different types of land cover is crucial for the study of ecological patterns or shifts over large regions. For this kind of work, researchers predominantly use high-resolution remote sensing data to track changes, rather than the ground-level, oblique repeat photography found in the MLP collection (that is, photos with an angled rather than orthogonal viewpoint). Remote sensing images captured by aerial and satellite sensors have proliferated over the past four decades and become the benchmark for ecosystem change detection. At the same time, deep convolutional neural networks, increasingly deployed to analyze complex image datasets, are emerging as an effective way to classify these images at scale.

Neural networks are a series of algorithms that roughly model neural structures found in the human brain. These algorithms allow for nonlinear information processing useful for pattern analysis, feature extraction, and classification of image data. Deep convolutional neural networks are composed of several layers of processing filters, each of which applies a specific computation called “convolution”: the filter sweeps across the image, incorporating the spatial context of image features to extract information. In our application, these filters first “learn” the characteristics of the training image dataset (i.e., the “ground-truth” data) under the constraint of correctly predicting land cover types. Using these trained filters, convolutional neural networks can then accurately identify objects and textures to generate high-resolution, pixel-level classifications. Deep learning models have been shown to be quite successful in extracting high-level feature information from remote sensing images, and have been widely used in the analysis of images with spatial resolutions of 10 m or finer (Ma et al., 2019). Deep learning has been used comparatively little, however, in landscape classification of ground-level photography. My thesis work evaluated this less-explored approach.
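To make the “sweeping” computation concrete, here is a minimal sketch in plain Python of a single filter sliding across a tiny grayscale image. The function name `convolve2d`, the toy image and the hand-set edge filter are illustrative assumptions, not code from the thesis. In a real network the filter weights are learned from the ground-truth data rather than set by hand, and, as in most deep learning libraries, the filter is applied without flipping it.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` and return the map of filter responses.

    Only positions where the filter fits entirely inside the image are
    evaluated, so the output is slightly smaller than the input.
    """
    kernel_h, kernel_w = len(kernel), len(kernel[0])
    out_h = len(image) - kernel_h + 1
    out_w = len(image[0]) - kernel_w + 1
    output = []
    for row in range(out_h):
        out_row = []
        for col in range(out_w):
            # Weighted sum of the pixel neighbourhood under the filter:
            # this is where spatial context enters the computation.
            total = 0.0
            for i in range(kernel_h):
                for j in range(kernel_w):
                    total += image[row + i][col + j] * kernel[i][j]
            out_row.append(total)
        output.append(out_row)
    return output


# Toy 4x5 image: dark columns (0) on the left, bright columns (1) on the right.
image = [
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
    [0, 0, 1, 1, 1],
]

# Hand-set filter that responds to dark-to-bright vertical edges.
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

response = convolve2d(image, kernel)
print(response)  # [[3.0, 3.0, 0.0], [3.0, 3.0, 0.0]]
```

The response is strong where the filter straddles the boundary between dark and bright pixels and zero over the uniform bright region, which is the sense in which a filter “extracts” a feature such as an edge or texture.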

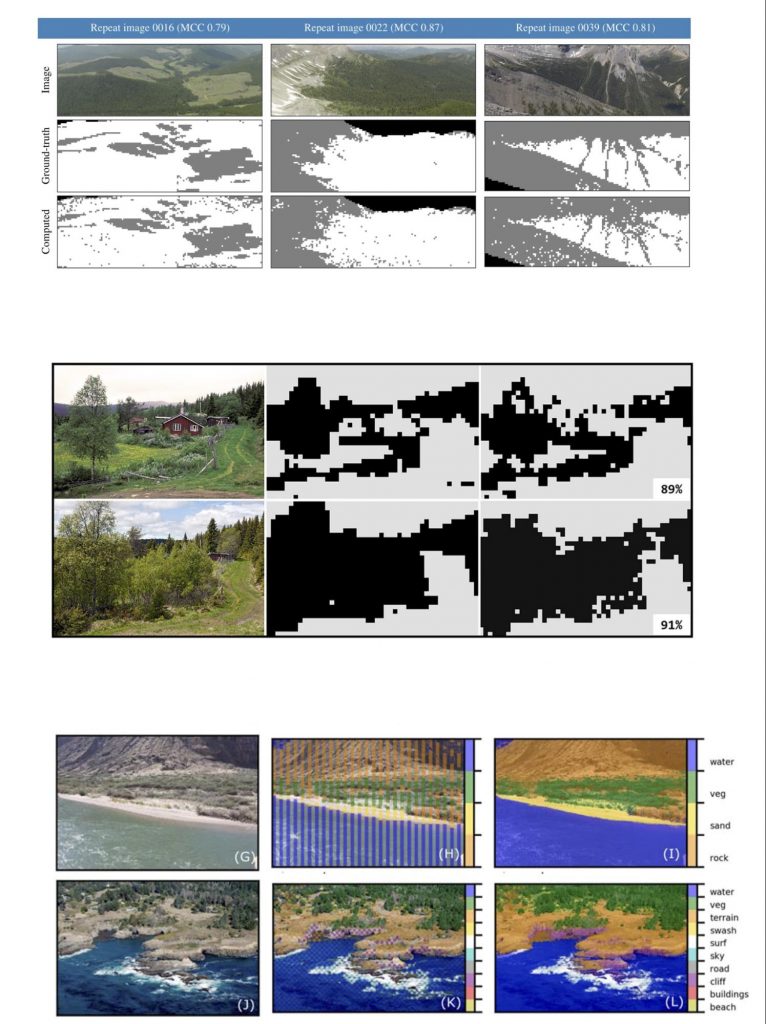

Recent research in landscape classification of oblique photography includes the work of Frédéric Jean, a postdoctoral researcher at UVic, who developed a support vector machine classifier, as well as classifiers developed by Bayr and Puschmann (2019) and Buscombe and Ritchie (2018); see example results below. In practice, though, landscape classification for oblique photographs is typically done by hand using a tablet and stylus or mouse, a time-consuming and labour-intensive process. Using manual techniques, classification of a large high-resolution image can take multiple hours. With an automated classifier, this might take only minutes. Automated classifiers therefore open up the possibility for more expansive studies of ecological patterns or shifts based on the classification of land cover over a broad scale. They would also allow researchers to incorporate more of the MLP collection into their quantitative research.

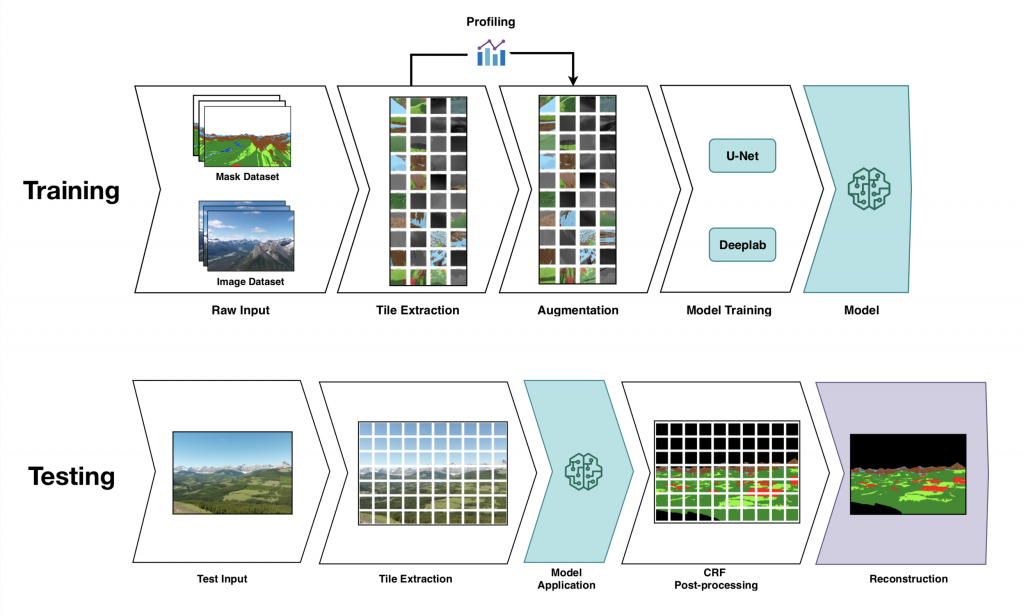

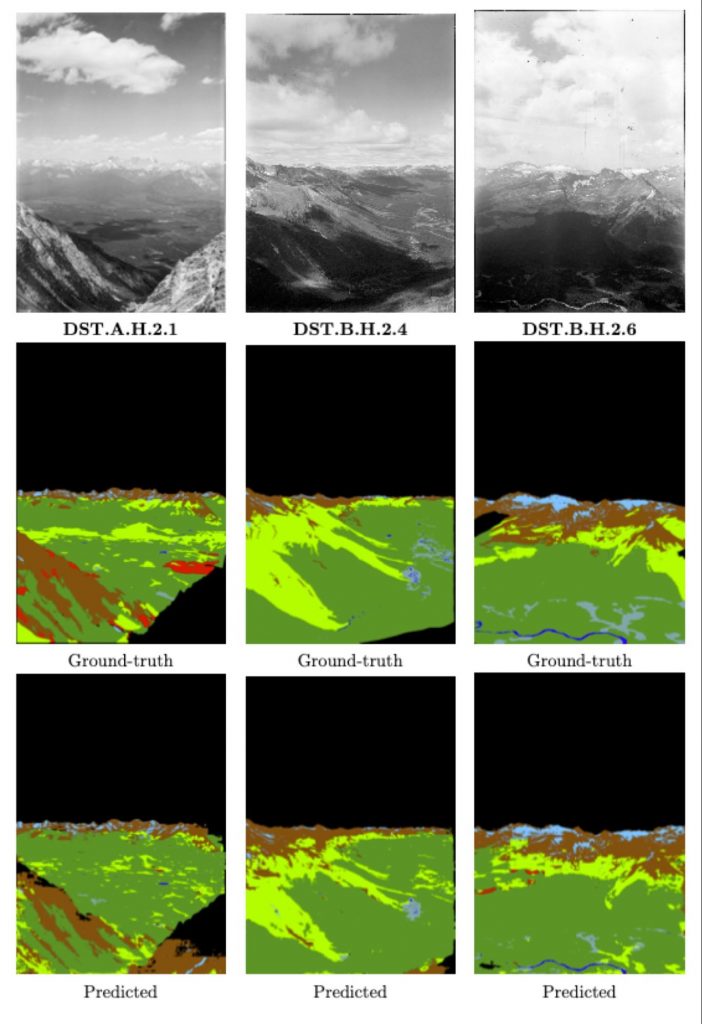

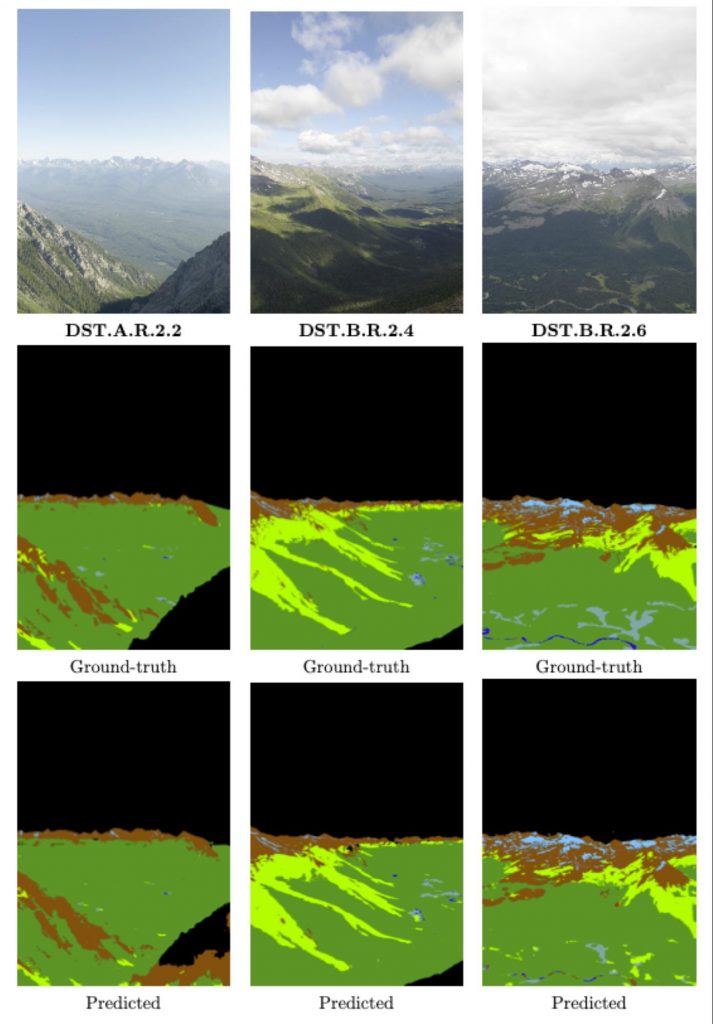

For my thesis work, I looked to recent developments in semantic segmentation, a core task in computer vision in which each pixel in an image is assigned a class or label. So-called “fully convolutional” neural networks, developed in the last decade, have been successfully used in wide-ranging application areas including autonomous vehicles and the analysis of biomedical images. My prototype classifier used two such networks, U-Net (Ronneberger et al., 2015) and DeepLab (Chen et al., 2017), with multiple enhancements, such as data augmentation (as shown in the workflow image above), to address specific challenges of the MLP collection dataset. Data augmentation is the process of extending the dataset with altered copies of the input data. In this case, the alterations include rotation, scaling, flipping, translation and changes to the brightness of the pixels. Details about augmentation and other enhancements are discussed at length in my thesis. The U-Net and DeepLab models were trained on MLP images, using as ground-truth data the corresponding manually created segmentation masks repurposed from two previous MLP studies by Frédéric Jean (2015) and Julie Fortin (2019). Example results are shown in the images below.
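As a rough illustration of data augmentation for segmentation, the sketch below produces an altered copy of an image together with its mask, using plain Python lists. It covers only three of the alterations listed above (flipping, rotation restricted to 90 degrees, and brightness), and the function names, probabilities and brightness range are assumptions made for the example rather than the settings used in the thesis. The point it tries to show is that geometric changes must be applied identically to the image and its mask so each pixel keeps its label, while brightness changes apply to the image only.

```python
import random


def horizontal_flip(grid):
    """Mirror a 2D grid left to right."""
    return [list(reversed(row)) for row in grid]


def rotate90(grid):
    """Rotate a 2D grid clockwise by 90 degrees."""
    return [list(row) for row in zip(*grid[::-1])]


def adjust_brightness(image, factor):
    """Scale pixel intensities by `factor`, clamped to the 0-255 range."""
    return [[min(255, max(0, round(p * factor))) for p in row] for row in image]


def augment(image, mask, rng):
    """Return one randomly altered copy of an (image, mask) pair."""
    if rng.random() < 0.5:  # assumed probability
        # Geometric change: apply to image and mask together.
        image, mask = horizontal_flip(image), horizontal_flip(mask)
    if rng.random() < 0.5:  # assumed probability
        image, mask = rotate90(image), rotate90(mask)
    # Photometric change: the labels do not depend on brightness,
    # so only the image is altered.
    image = adjust_brightness(image, rng.uniform(0.8, 1.2))  # assumed range
    return image, mask


# Toy 2x3 grayscale image and its land cover mask (hypothetical class ids).
image = [[10, 50, 200], [20, 60, 220]]
mask = [[0, 1, 2], [0, 1, 2]]

rng = random.Random(0)  # seeded so the example is repeatable
aug_image, aug_mask = augment(image, mask, rng)
```

Calling `augment` repeatedly with different random draws yields many altered copies of each labelled pair, which is how a small set of ground-truth masks can be stretched into a larger training set.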

Overall, the study showed encouraging results that demonstrate the potential gains of using deep learning approaches to classify land cover information held in oblique photographs. Further research and development are still needed before the approach can be put into wider practice and applied at scale to large and challenging datasets such as the MLP collection. Part of James Tricker’s research project will be to assess whether the deep learning model can generate maps of sufficient accuracy and detail to be used in landscape-scale change analyses. Other difficult challenges remain, the most significant of which is generating the large amount of training data needed for high-performance deep learning models. For this study, only 120 historic and repeat image pairs with manually created ground-truth land cover maps were used, a tiny fraction of the complete MLP collection. Still, deep learning will assuredly continue to evolve with improvements in speed, throughput (i.e., the rate of output) and performance. Landscape classification should likewise benefit from ongoing advances in neural network architecture and algorithms, including better accuracy, reduced training times, and new ways to address the bottleneck of limited ground-truth data. These advances may provide the key to a wider exploration and use of the MLP image data to broaden our historical and scientific understanding, as well as our appreciation, of mountain landscapes.
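One way to make the question of map accuracy concrete is to compare a predicted land cover mask with its ground-truth mask pixel by pixel. The Python sketch below assumes two metrics that are common in semantic segmentation, overall pixel accuracy and per-class intersection over union (IoU). The metrics, function names and toy masks are illustrative assumptions and are not necessarily the evaluation used in the thesis.

```python
def pixel_accuracy(predicted, truth):
    """Fraction of pixels whose predicted label matches the ground truth."""
    total = 0
    correct = 0
    for pred_row, true_row in zip(predicted, truth):
        for p, t in zip(pred_row, true_row):
            total += 1
            correct += int(p == t)
    return correct / total


def class_iou(predicted, truth, label):
    """Intersection over union for one class; None if the class is absent."""
    intersection = 0
    union = 0
    for pred_row, true_row in zip(predicted, truth):
        for p, t in zip(pred_row, true_row):
            in_pred = p == label
            in_true = t == label
            intersection += int(in_pred and in_true)
            union += int(in_pred or in_true)
    return intersection / union if union else None


# Toy 2x3 masks with hypothetical class ids 0, 1 and 2.
truth = [[0, 0, 1], [2, 2, 1]]
predicted = [[0, 1, 1], [2, 2, 2]]

print(pixel_accuracy(predicted, truth))  # 4 of 6 pixels match
print(class_iou(predicted, truth, 0))    # 0.5
```

Per-class scores are useful alongside overall accuracy because a classifier can score well overall while still missing a rare but ecologically important cover type.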

REFERENCES

Bayr, U., & Puschmann, O. (2019). Automatic detection of woody vegetation in repeat landscape photographs using a convolutional neural network. Ecological Informatics, 50, 220–233.

Buscombe, D., & Ritchie, A. C. (2018). Landscape Classification with Deep Neural Networks. Geosciences, 8(7), 244. https://doi.org/10.3390/geosciences8070244

Chen, L.-C., Papandreou, G., Kokkinos, I., Murphy, K., & Yuille, A. L. (2017). DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. arXiv:1606.00915 [cs]. http://arxiv.org/abs/1606.00915

Fortin, J. A., Fisher, J. T., Rhemtulla, J. M., & Higgs, E. S. (2019). Estimates of landscape composition from terrestrial oblique photographs suggest homogenization of Rocky Mountain landscapes over the last century. Remote Sensing in Ecology and Conservation, 5(3), 224–236. https://doi.org/10.1002/rse2.100

Jean, F., Albu, A. B., Capson, D., Higgs, E., Fisher, J. T., & Starzomski, B. M. (2015). The Mountain Habitats Segmentation and Change Detection Dataset. 2015 IEEE Winter Conference on Applications of Computer Vision, 603–609. https://doi.org/10.1109/WACV.2015.86

Ma, L., Liu, Y., Zhang, X., Ye, Y., Yin, G., & Johnson, B. A. (2019). Deep learning in remote sensing applications: A meta-analysis and review. ISPRS Journal of Photogrammetry and Remote Sensing, 152, 166–177.