By Julie Fortin and Michael Whitney

[This post also appears on the Landscapes in Motion blog]

The Mountain Legacy Project often uses the term “oblique photo” to describe the images we deal with, but what exactly does “oblique photo” mean?

Typically, people studying landscapes use images taken from satellites or airplanes. These “bird’s eye view” images are nice because they are almost perfectly perpendicular to the Earth’s surface—the kind of image you get when you switch to “Satellite View” on Google Maps. Each pixel corresponds to a fixed area (e.g. 30 m by 30 m) and represents a specific real-world location (e.g. a latitude and longitude).

Example of a satellite image. Landsat 8, path 42 row 25, August 22nd 2018. This image cuts through Calgary at the top and reaches south past Crowsnest Pass. Each pixel in this satellite image corresponds to a 30 m by 30 m square on the ground. Image courtesy USGS Earth Explorer.
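Here is one way to see how "each pixel represents a specific real-world location" works for a bird's eye view image. Many GIS tools store a north-up raster's georeferencing as a six-number affine "geotransform" (GDAL's convention), and turning a pixel index into map coordinates is a single affine step. In the minimal sketch below, the function names, the grid origin and the 30 m spacing are hypothetical values, not the real Landsat scene's.

```python
def pixel_to_map(col, row, geotransform):
    """Map coordinates of the upper-left corner of raster pixel (col, row).

    geotransform follows GDAL's six-number layout:
    (x_origin, pixel_width, row_rotation, y_origin, col_rotation, pixel_height),
    where pixel_height is negative for a north-up image.
    """
    x0, dx, rx, y0, ry, dy = geotransform
    return x0 + col * dx + row * rx, y0 + col * ry + row * dy

def pixel_center(col, row, geotransform):
    """Map coordinates of the centre of pixel (col, row)."""
    return pixel_to_map(col + 0.5, row + 0.5, geotransform)

# Hypothetical north-up 30 m grid whose upper-left corner sits at
# easting 600000 m, northing 5500000 m (e.g. in a UTM zone)
gt = (600000.0, 30.0, 0.0, 5500000.0, 0.0, -30.0)
print(pixel_center(10, 20, gt))  # → (600315.0, 5499385.0)
```

Each pixel always covers the same 30 m by 30 m square, which is exactly the property oblique photos lack.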

The Mountain Legacy Project (MLP), on the other hand, deals with historical and repeat photographs. These photos are taken from the ground, at an “oblique” angle—like switching to “Street View” on Google Maps. Pixels closer to the camera represent a smaller area than pixels farther away, and it is difficult to know the exact real-world location of each pixel on a map.

Difficult, but not impossible.

Example of an oblique image. This photograph of Crowsnest Mountain was taken in 1913 by surveyor Morrison Parsons Bridgland, from Sentry Mountain, and was repeated by the Mountain Legacy Project in 2006. Photo courtesy of the Mountain Legacy Project.
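How much does pixel size change across an oblique photo? Here is a rough back-of-the-envelope sketch that assumes an idealised pinhole camera with square pixels. The 60° field of view and 6000-pixel width are made-up values, not those of any MLP camera. Every pixel covers the same small angle, so the ground width it covers grows in proportion to distance. Where the line of sight meets the ground at a shallow (grazing) angle, the pixel is also stretched along the view direction.

```python
import math

def pixel_footprint(distance_m, hfov_deg, width_px, grazing_deg=90.0):
    """Approximate ground footprint (width, depth) in metres of one photo pixel.

    distance_m  -- distance from the camera to the ground seen by the pixel
    hfov_deg    -- horizontal field of view of the camera
    width_px    -- image width in pixels (square pixels assumed)
    grazing_deg -- angle between the line of sight and the ground surface;
                   90 means the surface faces the camera squarely
    """
    pixel_angle = math.radians(hfov_deg) / width_px      # angle seen by one pixel
    width = 2 * distance_m * math.tan(pixel_angle / 2)   # grows linearly with distance
    depth = width / math.sin(math.radians(grazing_deg))  # stretched on oblique ground
    return width, depth

# Made-up camera: 60° horizontal field of view, 6000 pixels wide
print(pixel_footprint(500, 60, 6000))                     # about 0.09 m a side, 500 m away
print(pixel_footprint(10_000, 60, 6000))                  # about 1.75 m a side, 10 km away
print(pixel_footprint(10_000, 60, 6000, grazing_deg=10))  # about 1.75 m wide, 10 m deep
```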

Given the incredible time range captured by MLP photo pairs—the historical photos were captured decades before satellite imagery became available—it is worth overcoming this challenge to study land cover change. It would be especially awesome if we could estimate areas of different land covers (e.g. evergreen forest or open meadow) as captured by the pictures. Thus, we have taken on the difficult but not impossible challenge of developing a tool to assign real-world locations to each pixel of an oblique photograph.

Several similar tools already exist (see for example the WSL Monoplotting Tool, JUKE method, PCI Geomatica Orthoengine, Barista, QGIS Pic2Map). However, many of these tools require pretty heavy user input: for instance, someone has to sit at a computer and select corresponding points between oblique photos and 2D maps. Given the vastness of the MLP collection, this could take years. So, we are building a way to automate the process, making it fast, scalable and accurate.

“How will you do this,” you ask? A little bit of elevation data, a little bit of camera information, a little bit of math and a whole lot of programming.

The Tool

The tool we are developing is part of the MLP’s Image Analysis Toolkit. It is a web-based program written in JavaScript.

To use it, we need a Digital Elevation Model (DEM). A DEM is a map in which each pixel has a number that represents its elevation. We also need to know the camera’s position (latitude, longitude, elevation; i.e. where it is) and orientation (azimuth, tilt and inclination; i.e. where it is facing), plus its field of view.

Example of a Digital Elevation Model. Darker colours indicate lower elevations, lighter colours indicate higher elevations. Image courtesy of Japan Aerospace Exploration Agency (©JAXA).
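Camera positions and viewing rays rarely land exactly on a DEM cell centre, so it is handy to be able to read an elevation anywhere on the grid. Below is a minimal sketch using bilinear interpolation. It assumes a north-up DEM stored as a list of rows (row 0 at the northern edge) with square cells. The function name and argument layout are hypothetical illustrations, not the toolkit's code.

```python
def dem_elevation(dem, x, y, x0, y0, cell):
    """Bilinearly interpolate the DEM elevation at map point (x, y).

    dem    -- list of rows of elevations in metres (at least 2 x 2);
              row 0 is the northern edge
    x0, y0 -- map coordinates of the centre of cell dem[0][0]
    cell   -- cell size in metres (e.g. 5.0 for a 5 m DEM)
    """
    nrows, ncols = len(dem), len(dem[0])
    col = (x - x0) / cell          # fractional column, increasing eastward
    row = (y0 - y) / cell          # fractional row, increasing southward
    if not (0 <= col <= ncols - 1 and 0 <= row <= nrows - 1):
        raise ValueError("point lies outside the DEM")
    c0 = min(int(col), ncols - 2)  # left neighbour (clamped at the last column)
    r0 = min(int(row), nrows - 2)  # upper neighbour (clamped at the last row)
    fc, fr = col - c0, row - r0    # weights toward the right/lower neighbours
    top = dem[r0][c0] * (1 - fc) + dem[r0][c0 + 1] * fc
    bottom = dem[r0 + 1][c0] * (1 - fc) + dem[r0 + 1][c0 + 1] * fc
    return top * (1 - fr) + bottom * fr

dem = [[1000, 1010],
       [1020, 1030]]                                 # tiny hypothetical 2 x 2 DEM
print(dem_elevation(dem, 2.5, 2.5, 0.0, 5.0, 5.0))  # → 1015.0 (midway between all four cells)
```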

The Virtual Image

We start off by creating a “virtual image”: a silhouette of what can be seen from the specified camera location and orientation. To do so, we trace an imaginary line from each pixel in the DEM to the camera and see where it falls within the photo frame.

Example of a virtual image generated from a point atop Sentry Mountain, facing Crowsnest Mountain.
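Tracing a line from a DEM point to the camera and finding where it crosses the photo frame is a pinhole-camera projection. The sketch below shows that idea under several simplifying assumptions: metres with x = east, y = north, z = up; azimuth measured clockwise from north; tilt positive upward; the image held level (no roll); square pixels; and no lens distortion or Earth curvature. The names `make_camera`, `project` and `virtual_image` are hypothetical and are not the Image Analysis Toolkit's API.

```python
import math

def make_camera(cam_x, cam_y, cam_z, azimuth_deg, tilt_deg, hfov_deg, width, height):
    """Describe a level pinhole camera (roll assumed to be zero)."""
    az, t = math.radians(azimuth_deg), math.radians(tilt_deg)
    return {
        "pos": (cam_x, cam_y, cam_z),
        "forward": (math.sin(az) * math.cos(t), math.cos(az) * math.cos(t), math.sin(t)),
        "right": (math.cos(az), -math.sin(az), 0.0),
        "up": (-math.sin(az) * math.sin(t), -math.cos(az) * math.sin(t), math.cos(t)),
        # focal length in pixels, derived from the horizontal field of view
        "focal": (width / 2) / math.tan(math.radians(hfov_deg) / 2),
        "width": width,
        "height": height,
    }

def dot(a, b):
    return sum(p * q for p, q in zip(a, b))

def project(cam, x, y, z):
    """Return (col, row, depth) where world point (x, y, z) lands in the frame, or None."""
    v = tuple(p - c for p, c in zip((x, y, z), cam["pos"]))  # camera-to-point vector
    depth = dot(v, cam["forward"])
    if depth <= 0:
        return None                                          # behind the camera
    col = cam["width"] / 2 + cam["focal"] * dot(v, cam["right"]) / depth
    row = cam["height"] / 2 - cam["focal"] * dot(v, cam["up"]) / depth  # rows grow downward
    if 0 <= col < cam["width"] and 0 <= row < cam["height"]:
        return col, row, depth
    return None                                              # outside the field of view

def virtual_image(cam, dem, x0, y0, cell):
    """Mark every frame pixel that at least one DEM cell centre projects into.

    dem is a list of rows (row 0 = north); (x0, y0) is the centre of dem[0][0].
    """
    hit = [[False] * cam["width"] for _ in range(cam["height"])]
    for r, dem_row in enumerate(dem):
        for c, z in enumerate(dem_row):
            p = project(cam, x0 + c * cell, y0 - r * cell, z)
            if p is not None:
                hit[int(p[1])][int(p[0])] = True
    return hit

cam = make_camera(0.0, 0.0, 0.0, 0.0, 0.0, 90.0, 100, 100)  # hypothetical camera facing due north
print(project(cam, 10.0, 100.0, 10.0))  # roughly (55, 45, 100): right of and above centre
```

Because each DEM cell centre lands on a single pixel, nearby terrain can leave gaps in this silhouette. A fuller version would rasterise whole cells rather than their centres.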

The Virtual Photograph

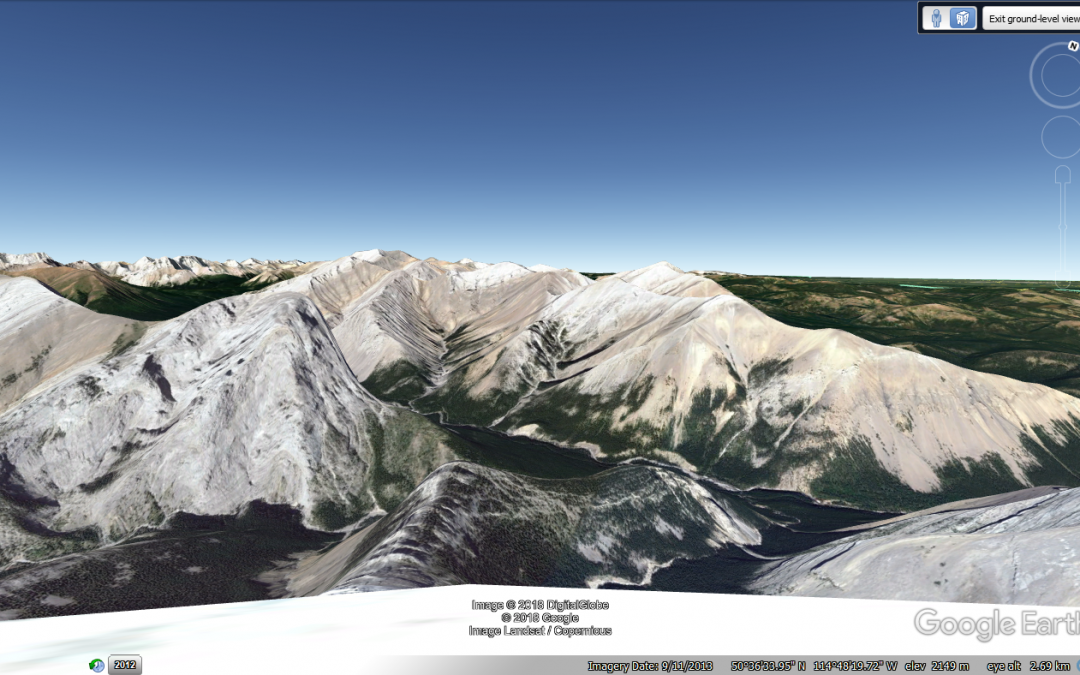

Have you ever zoomed into the mountains on Google Earth and switched to “ground-level view”? When that happens, Google Earth basically shows satellite images from an oblique angle. It does this in places that are remote and don’t have Street View photos.

With our “virtual photograph” tool, we do more or less the same thing. This step can be helpful for looking at areas where repeat photographs haven’t been taken.

Example of ground-level view in the mountains in Google Earth.

Remember how earlier we mapped DEM pixels onto a “virtual image”? We repeat the process, this time mapping satellite image pixels onto the virtual image. This creates what we call a “virtual photograph”, displaying the satellite image from an oblique view.

Example of a virtual photograph generated from satellite imagery for Crowsnest Mountain. Satellite imagery courtesy of Alberta Agriculture and Forestry.
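When satellite pixels are projected into the frame, several can land on the same photo pixel, for example a ridge in front and the valley behind it. A standard way to resolve this is a depth buffer ("z-buffer"), where the nearest sample wins. The sketch below assumes each satellite pixel has already been projected into (col, row, depth, colour) form with a camera model like the one used for the virtual image. The function name is hypothetical, and we don't know that the MLP tool resolves overlaps this way.

```python
import math

def drape(samples, width, height, background=(0, 0, 0)):
    """Build a 'virtual photograph' from projected satellite pixels.

    samples: iterable of (col, row, depth, colour) tuples, one per satellite
    pixel already projected into the frame; colour is e.g. an (R, G, B) tuple.
    Where several samples land on the same frame pixel, the nearest one
    (smallest depth) wins, so nearer terrain hides terrain behind it.
    """
    depth_buf = [[math.inf] * width for _ in range(height)]
    image = [[background] * width for _ in range(height)]
    for col, row, depth, colour in samples:
        c, r = math.floor(col), math.floor(row)
        if 0 <= c < width and 0 <= r < height and depth < depth_buf[r][c]:
            depth_buf[r][c] = depth
            image[r][c] = colour
    return image

samples = [(1.2, 0.5, 300.0, (34, 139, 34)),    # forest pixel, far away
           (1.7, 0.9, 120.0, (128, 128, 128))]  # rock pixel, nearer, same frame pixel
print(drape(samples, 3, 2)[0][1])  # → (128, 128, 128)
```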

Oblique Photographs and the Viewshed

This brings us to the point where we have a photograph (say, a historical or repeat photograph), and we want to calculate the area of different landscape types in the photo (e.g., a forest or meadow). To do this, we need to know the real-world location of each pixel in the oblique photo.

Using the camera’s position, orientation and field of view, we work out where each oblique photo pixel belongs on the DEM. This is the reverse of the “virtual image” step from earlier: instead of placing DEM pixels into the photo, we place photo pixels onto the DEM. This creates a map, which we call a “viewshed”, showing the parts of the landscape that are actually visible within the photograph. (In the DEM, which sees the landscape from above, we can see the back of the mountain, but in the photograph we can’t. By creating the viewshed, we avoid trying to assign values to parts of the landscape that are hidden from view.)

Example of a viewshed. Each blue pixel should be visible from the given camera location and orientation. Each white pixel cannot be seen in the oblique photo view. (Green pixels represent the foreground, and black pixels are outside the camera’s field of view.)
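One common way to place a photo pixel onto the DEM is ray marching. You step outward along the pixel's viewing ray until the ray first drops below the terrain, and the cell found there is what that pixel sees. Any cell that no ray reaches, such as the far side of a mountain, stays out of the viewshed. The sketch below assumes nearest-cell sampling, a step of half a cell, and ray directions supplied by some camera model (one per photo pixel, not computed here). It ignores Earth curvature, and all names are hypothetical; this is a generic technique, not necessarily the MLP tool's method.

```python
import math

def first_hit(cam_pos, direction, dem, x0, y0, cell, step=None, max_dist=20_000.0):
    """March along one viewing ray until it first dips below the terrain.

    cam_pos   -- (x, y, z) of the camera in metres (x east, y north, z up)
    direction -- viewing direction of one photo pixel (need not be unit length)
    dem       -- list of rows of elevations; row 0 is the northern edge and
                 (x0, y0) is the centre of dem[0][0]; cell is the cell size
    Returns the (row, col) of the DEM cell the pixel sees, or None if the ray
    leaves the DEM or travels max_dist without hitting ground (e.g. sky).
    """
    if step is None:
        step = cell / 2                            # half a cell, so few cells are skipped
    norm = math.sqrt(sum(d * d for d in direction))
    dx, dy, dz = (d / norm for d in direction)
    cx, cy, cz = cam_pos
    t = step
    while t <= max_dist:
        x, y, z = cx + t * dx, cy + t * dy, cz + t * dz
        col = math.floor((x - x0) / cell + 0.5)    # nearest cell centre
        row = math.floor((y0 - y) / cell + 0.5)
        if not (0 <= row < len(dem) and 0 <= col < len(dem[0])):
            return None                            # ray left the DEM
        if z <= dem[row][col]:
            return row, col                        # first terrain the ray meets
        t += step
    return None

def viewshed(cam_pos, ray_directions, dem, x0, y0, cell):
    """Set of DEM cells seen by at least one photo pixel."""
    visible = set()
    for direction in ray_directions:
        hit = first_hit(cam_pos, direction, dem, x0, y0, cell)
        if hit is not None:
            visible.add(hit)
    return visible

# Hypothetical north-south transect: valley floor (south), then a slope and ridge
dem = [[100], [100], [100], [50], [0], [0]]
cam = (0.0, 0.0, 2.0)                                          # 2 m above the valley floor
print(first_hit(cam, (0.0, 1.0, 0.0), dem, 0.0, 50.0, 10.0))  # → (3, 0)
```

The camera is placed a little above the ground so that the first steps don't immediately "hit" the cell it stands on.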

Now we know where on the DEM each pixel of an oblique photo lands, which means we know where each pixel of the photo sits on the ground! And because each DEM pixel covers a fixed area (e.g. 5 m by 5 m), we can estimate the area covered by oblique photo pixels.

This process will allow us to take a historical or repeat photo and compute the true area covered by different forest types, meadows, burned forest, and more.

Left: the same historical photograph of Crowsnest Mountain as above, this time with different land cover types drawn onto it (rock in red, alpine meadow in yellow, forest in green). Right: the accompanying viewshed, showing where those coloured areas exist in 2D. In the viewshed image, we can count how many pixels are red, yellow or green, multiply those counts by 25 m² (because each pixel measures 5 m by 5 m), and estimate the area covered by rock, alpine meadow or forest.
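The counting step in that caption is short enough to write out. The sketch below assumes the viewshed has already been labelled cell by cell (the class names and the tiny grid are hypothetical) and multiplies each class's cell count by the area of one 5 m by 5 m cell.

```python
from collections import Counter

def land_cover_areas(labels, cell_size_m=5.0):
    """Total ground area (m²) of each land-cover class in a classified viewshed.

    labels -- 2-D list matching the DEM grid; each entry is a class name, or
              None where the cell is hidden or outside the field of view
    """
    counts = Counter(label for row in labels for label in row if label is not None)
    cell_area = cell_size_m ** 2              # 25 m² for a 5 m by 5 m DEM cell
    return {label: n * cell_area for label, n in counts.items()}

# Tiny hypothetical classified viewshed
grid = [["rock",   "rock",   None],
        ["meadow", "forest", "forest"],
        [None,     "forest", None]]
print(land_cover_areas(grid))  # → {'rock': 50.0, 'meadow': 25.0, 'forest': 75.0}
```

This gives map (planimetric) area, as in the caption. On steep slopes the actual surface area of each cell is larger, by roughly a factor of 1 / cos(slope).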

What next?

The MLP team is currently ironing out some kinks in the tool, such as accounting for the curvature of the Earth. But soon, this tool will allow us to map out historical and repeat photos. This means we can measure the extent and spatial location of different land covers, quantify where and how much change occurred between two photos, and assess coverage of entire surveys in the MLP collection.
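To get a sense of why curvature matters over mountain-scale distances, the standard surveying approximation says the ground at horizontal distance d lies about (1 − k)·d²/(2R) below a flat, level plane through the camera's position. Here R ≈ 6,371 km is the Earth's mean radius and k is an optional atmospheric-refraction coefficient (a value around 0.13 is commonly used). The sketch below is only an illustration of that formula with hypothetical function names. It is not the MLP team's planned fix.

```python
EARTH_RADIUS_M = 6_371_000.0  # mean radius of the Earth

def curvature_drop(distance_m, refraction_k=0.0):
    """Metres by which the ground distance_m away falls below a flat, level plane.

    Uses the usual approximation (1 - k) * d**2 / (2 * R); refraction_k is an
    optional atmospheric-refraction coefficient (0 means geometry only).
    """
    return (1 - refraction_k) * distance_m ** 2 / (2 * EARTH_RADIUS_M)

def corrected_elevation(dem_z, distance_m, refraction_k=0.0):
    """Lower a DEM elevation by the curvature drop before projecting it."""
    return dem_z - curvature_drop(distance_m, refraction_k)

print(round(curvature_drop(10_000), 2))  # → 7.85 (metres, at 10 km)
print(round(curvature_drop(20_000), 2))  # → 31.39 (metres, at 20 km)
```

Because the drop grows with the square of distance, it is negligible in the foreground but amounts to several metres on peaks 10 km or more away.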

Where we go from there depends only on the questions we seek to answer from the photos.

Many thanks to Sonya Odsen with the Landscapes in Motion Outreach and Engagement Team for her help making this piece more digestible!